By Moule Lin

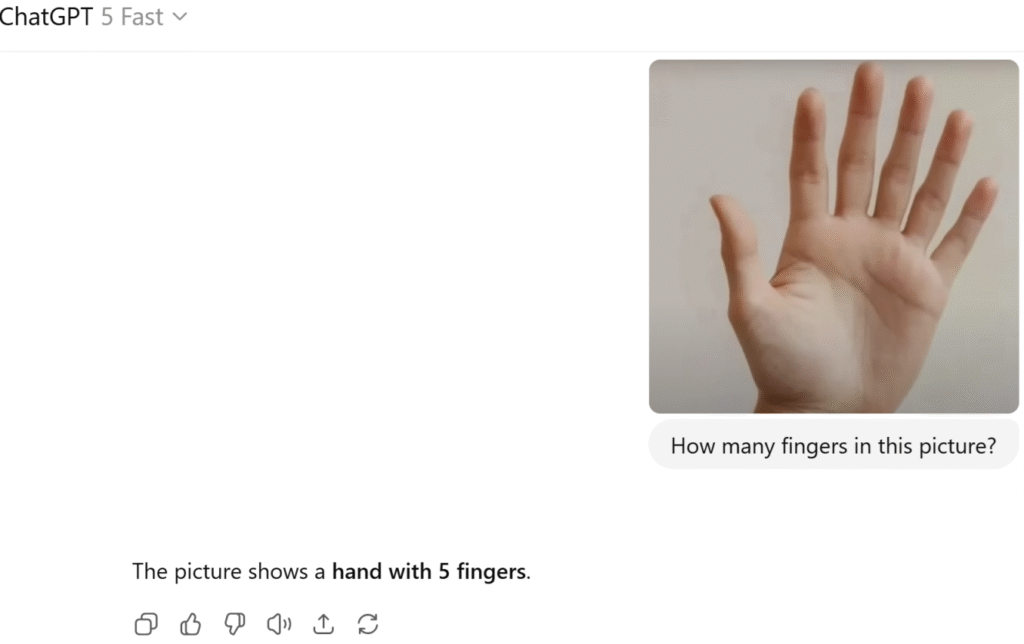

When using AI, it is often helpful or even necessary to have an estimate of how uncertain the results are, because models are sometimes overconfident. As an example, consider asking a multimodal model how many fingers it sees in the image of a hand.

However, due to the underlying mathematical models, the estimation of uncertainty with existing approaches is computationally expensive.

Hence, our research project on Efficient Uncertainty Estimation (EUE) aims to devise efficiency-first techniques that estimate how unsure the model is without the usual computational cost. One approach is to compress the “heavy” parts of the uncertainty computation so that we keep the useful signal while avoiding time-intensive calculations.

In summary:

- Problem: AI can sound confident when it’s actually unsure (for example, hallucination in large language models).

- What we add: A tiny, built-in uncertainty signal that runs in a single pass, with no slow re-runs and no large extra inference cost.

- Why it matters: You get answers and a simple “confidence badge” (high / medium / low) without hurting speed.

1. What is Efficient Uncertainty Estimation?

Efficient Uncertainty Estimation is a way to reduce the computation needed to determine “how sure” an AI is. Instead of doing lots of extra work when you ask a question, we design the model so it can provide a reliable indicator of the uncertainty while producing the answer.

Imagine that next to any output the model produces, there is a little indicator that turns red, yellow, or green depending on the model’s certainty about that result.
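To make the traffic-light idea concrete, here is a minimal sketch of how a single-pass score could be turned into a badge. It assumes the score is simply the largest softmax probability, which is only a stand-in (raw softmax scores are often overconfident, which is the very problem described above). The function names and the thresholds `high=0.9` and `low=0.6` are hypothetical choices for illustration, not values from the project.

```python
import math

def softmax(logits):
    """Turn raw model scores into probabilities (numerically stable)."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def confidence_badge(logits, high=0.9, low=0.6):
    """Map the top class probability to a traffic-light badge.

    The thresholds are illustrative assumptions, not tuned values.
    """
    top = max(softmax(logits))
    if top >= high:
        return "green", top
    if top >= low:
        return "yellow", top
    return "red", top

# One forward pass yields the logits; the badge needs no extra model runs.
for logits in ([10.0, 0.0, 0.0], [1.5, 0.0, 0.0], [1.0, 1.0, 1.0]):
    badge, score = confidence_badge(logits)
    print(f"{badge:6s} top probability = {score:.2f}")
```

The point of the sketch is only the interface: the answer and the badge come out of the same forward pass.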

As another example, consider a model that receives images from a car’s video camera and has to classify each pixel into categories such as car, road, traffic sign, and pedestrian. The following screenshot (based on a dataset from [1]) shows an example:

At the boundaries where the image transitions between, for instance, a road and a car, the uncertainty will be higher since the model is less confident about pixel classifications. This is indicated by pixel color: white means higher uncertainty.
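One common way to draw such a map is to compute the entropy of each pixel’s class probabilities and display it as brightness. The sketch below assumes the model already outputs a probability vector per pixel. The function names and the tiny three-pixel “image” are made up for illustration, and the screenshot above may have been produced with a different uncertainty measure.

```python
import math

def normalized_entropy(probs):
    """Entropy of a class distribution, scaled to [0, 1].

    0 means one class has all the probability; 1 means all classes
    are equally likely. Expects at least two classes.
    """
    h = -sum(p * math.log(p) for p in probs if p > 0.0)
    return h / math.log(len(probs))

def uncertainty_map(prob_image):
    """Apply the entropy to every pixel of a rows x cols x classes image."""
    return [[normalized_entropy(px) for px in row] for row in prob_image]

# Hypothetical one-row image with classes (road, car, pedestrian):
# road interior, a road/car boundary pixel, car interior.
strip = [[
    [0.98, 0.01, 0.01],
    [0.50, 0.50, 0.00],
    [0.01, 0.98, 0.01],
]]

for value in uncertainty_map(strip)[0]:
    print(f"{value:.2f}")  # larger value = brighter (more uncertain) pixel
```

The boundary pixel, where road and car are equally likely, gets a much larger value than the two interior pixels.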

2. How Does the Approach Achieve Its Efficiency?

- Share what’s redundant [2]

Big model weights are redundant. We merge similar pieces so the model carries less baggage but keeps the same information to sense when something “seems off”.

- Project information on certainty/uncertainty into a smaller space

Instead of tracking uncertainty everywhere, we keep a compact internal indicator focused on the most telling signals. That’s how we get a single-pass uncertainty score that flags when the model is not so sure about an answer.
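As a rough illustration of the first idea, sharing what is redundant, the sketch below replaces a list of weights with a handful of shared values using a simple one-dimensional k-means. This is a generic weight-sharing technique chosen for illustration; it is not claimed to be the method from [2], and `share_weights`, `k`, and the example weights are all hypothetical.

```python
def share_weights(weights, k=3, iters=20):
    """Cluster scalar weights into k shared values (1-D k-means, k >= 2).

    Returns the shared values and, for each weight, the index of the
    shared value that replaces it.
    """
    lo, hi = min(weights), max(weights)
    # Start with centroids spread evenly over the weight range.
    centroids = [lo + (hi - lo) * i / (k - 1) for i in range(k)]

    def nearest(w):
        return min(range(k), key=lambda j: abs(w - centroids[j]))

    for _ in range(iters):
        assign = [nearest(w) for w in weights]
        for j in range(k):
            members = [w for w, a in zip(weights, assign) if a == j]
            if members:  # keep the old centroid if a cluster is empty
                centroids[j] = sum(members) / len(members)
    return centroids, [nearest(w) for w in weights]

weights = [0.10, 0.11, 0.09, -0.50, -0.52, 0.90, 0.88]
shared, index = share_weights(weights, k=3)

# Seven stored numbers become three shared values plus small indices.
approx = [shared[i] for i in index]
worst = max(abs(w - a) for w, a in zip(weights, approx))
print(len(set(index)), "shared values, worst error", round(worst, 3))
```

Storing a few shared values plus an index per weight is cheaper than storing every weight separately, at the price of a small approximation error.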

FAQ

Does this make AI underconfident or overconfident?

Neither. Our goal is a well-calibrated estimate: when the model says it is “70% sure,” it should be right about 70% of the time, not more, not less [3][4].
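A common way to check this property is the expected calibration error (ECE): group predictions by stated confidence and compare each group’s average confidence with its actual accuracy. The sketch below is a minimal version under the assumption of equal-width bins. The function name, the bin count, and the toy data are illustrative, and [3][4] may use a different metric.

```python
def expected_calibration_error(confidences, correct, n_bins=10):
    """Weighted average gap between stated confidence and accuracy.

    confidences: predicted probabilities in [0, 1]
    correct:     booleans, True where the prediction was right
    """
    n = len(confidences)
    bins = [[] for _ in range(n_bins)]
    for c, ok in zip(confidences, correct):
        idx = min(int(c * n_bins), n_bins - 1)  # c == 1.0 goes in the last bin
        bins[idx].append((c, ok))

    ece = 0.0
    for b in bins:
        if not b:
            continue
        avg_conf = sum(c for c, _ in b) / len(b)
        accuracy = sum(1 for _, ok in b if ok) / len(b)
        ece += len(b) / n * abs(avg_conf - accuracy)
    return ece

# "70% sure" and right 7 times out of 10: well calibrated.
calibrated = expected_calibration_error([0.7] * 10, [True] * 7 + [False] * 3)
# "90% sure" but right only 5 times out of 10: overconfident.
overconfident = expected_calibration_error([0.9] * 10, [True] * 5 + [False] * 5)
print(f"{calibrated:.2f} {overconfident:.2f}")
```

A value near zero means the stated confidence can be taken at face value; a larger value means the model is over- or underconfident.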

Will it slow responses?

No. Our approach is built for single-pass checks [5], so the uncertainty estimate comes “almost for free” with the answer.

Do I need special hardware?

No. If anything, it’s more hardware-friendly because it reduces extra compute.

Summary

Efficient Uncertainty Estimation provides a trustworthy indicator for the uncertainty of the results, with reduced computational effort. This is particularly useful in domains where incorrect results can have severe negative consequences, for instance, self-driving cars or medical diagnosis.